The Electronic Frontier Foundation (EFF) explores concerns around police use of AI to draft reports, warning of potential biases and inaccuracies that can threaten fair legal proceedings.

Main Points:

- Bias and Inaccuracy: AI models can perpetuate existing biases, leading to reports that might disproportionately target certain groups.

- Over-Reliance on Technology: Police may become over-dependent on AI-generated reports, possibly reducing the quality of their own investigative work.

- Lack of Transparency: The closed nature of many AI models can make it hard for defendants to challenge or understand how reports are generated.

Summary:

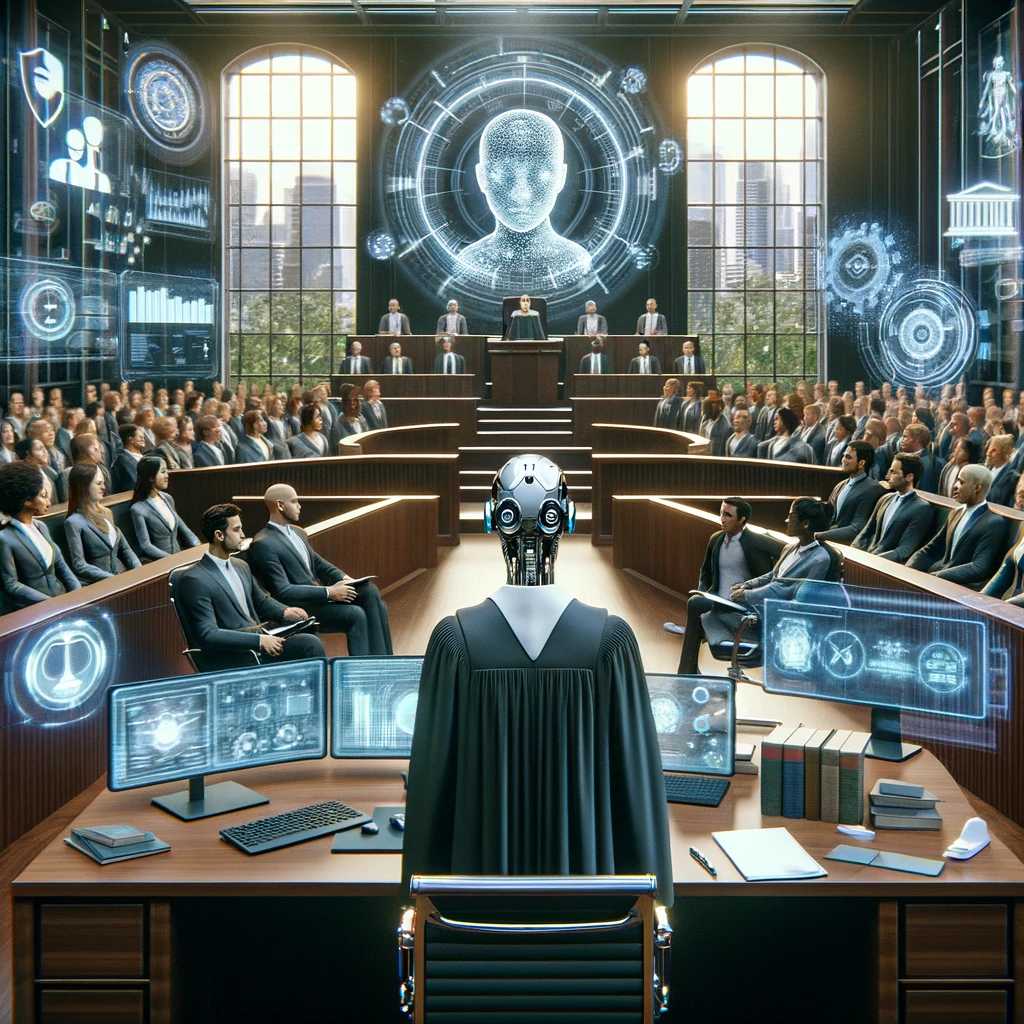

The Electronic Frontier Foundation (EFF) discusses the challenges and risks posed by police use of AI to write reports. One major concern is bias in AI algorithms, which can produce reports that unfairly target marginalized communities. In addition, relying on AI to generate reports can reduce officers’ active involvement in investigative work, potentially leading to lower-quality investigations and leaving less room for human judgment in policing.

Transparency is another critical issue, because many AI models are proprietary and not fully explainable. This can create difficulties in legal proceedings, particularly when defendants and their lawyers cannot fully understand or challenge the technology’s output. Overall, while AI promises efficiency gains in policing, the risks it poses to justice, accuracy, and transparency call for careful consideration and regulation.

Source: What Can Go Wrong When Police Use AI to Write Reports?
